The democratization of Artificial Intelligence (AI) makes it easier for organizations to transform their business with AI. Not long ago, applying AI to a business required deep technical expertise and hiring from a scarce talent pool, not to mention the expensive infrastructure needed to achieve such a feat. Only larger organizations, working on significant problems, could afford and justify that investment.

What does the democratization of AI really mean? It means a maturing AI ecosystem is lowering the barrier to entry into the world of AI transformation. The ecosystem reduces complexity but doesn’t eliminate it. AI is about continuous learning, so it only makes sense to transfer knowledge from existing AI solutions to help solve new problems.

There is no need to reinvent the wheel. That holds for similar problems, but problems vary widely, and knowledge is not always transferable. Therefore, the AI ecosystem must continue to improve to accelerate AI transformation across all verticals. Meanwhile, AI models are rapidly growing in complexity and size, demanding more capable infrastructure.

One common measure of an AI model’s complexity is its number of parameters, the values the model learns during training. On the same hardware, doubling a model’s parameters roughly doubles the computation needed for each training example, and larger models are typically trained on more data as well, so total processing time grows faster still.

To put this fast-growing complexity in perspective, consider GPT (Generative Pre-trained Transformer). The GPT models are language prediction models produced by OpenAI, an AI research laboratory. Given a text prompt, such as a phrase or a sentence, GPT returns a text completion in natural language.

The first iteration (GPT-1), released in 2018, had 117 million parameters. A year later, the second iteration (GPT-2) was released with 1.5 billion parameters. A year after that, the third iteration (GPT-3) grew to 175 billion parameters, roughly a 1,500-fold increase over GPT-1 in two years.

| | GPT-1 (2018) | GPT-2 (2019) | GPT-3 (2020) |
|---|---|---|---|
| # of parameters | 117 million | 1.5 billion | 175 billion |

Table 1 – GPT iterations with # of parameters
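A quick calculation on the figures in Table 1 shows how steep the growth is from one iteration to the next. The Python sketch below simply computes the ratio between consecutive GPT iterations; the names `PARAMS` and `growth_factors` are illustrative and not part of any library.

```python
# Parameter counts taken from Table 1.
PARAMS = {
    "GPT-1": 117_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}


def growth_factors(counts: dict) -> dict:
    """Return how many times larger each model is than its predecessor."""
    names = list(counts)  # dicts preserve insertion order (Python 3.7+)
    return {
        f"{prev} -> {curr}": counts[curr] / counts[prev]
        for prev, curr in zip(names, names[1:])
    }


for step, factor in growth_factors(PARAMS).items():
    print(f"{step}: {factor:.1f}x")
# GPT-1 -> GPT-2: 12.8x
# GPT-2 -> GPT-3: 116.7x
```

Note that the jump from GPT-2 to GPT-3 alone is larger than the jump from GPT-1 to GPT-2, which is why hardware improvements struggle to keep pace.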

At the same time, organizations are looking for ways to shorten the development time of AI models. To stay ahead of the competition, they need innovative solutions and the ability to execute faster than others.

The combination of rapidly growing model complexity and the need for faster results demands infrastructure that can address both concerns. Although infrastructure becomes more powerful every year, hardware gains alone have not kept pace with the growing complexity of the models.

As a result, vendors offer solutions that combine many high-performance components, such as GPUs, with a software stack that takes full advantage of their compute capabilities. These solutions aim to deliver a high degree of parallelization at scale with maximum efficiency.

AI is about collecting data, analyzing it, and creating a model that represents it.

Quantity:

The theory is that a larger “quantity” of data increases the probability of a more accurate AI model. However, the “quality” of the data is equally important.

Quality:

To meet the “quality” criteria, data must be related to the subject matter and free of errors. Data that doesn’t meet these criteria can reduce the accuracy of an AI model and make it less reliable. Therefore, many AI workflows include a “cleaning” stage that excludes such data.

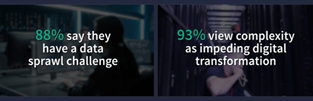
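As an illustration of the cleaning stage, here is a minimal Python sketch. It assumes a hypothetical record schema (`Record` with `topic`, `text`, `label`) and hypothetical quality criteria (`RELEVANT_TOPICS`, `VALID_LABELS`); real pipelines define their own rules, but the shape is the same: check each record against the criteria and exclude the ones that fail.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Record:
    # Hypothetical record schema, for illustration only.
    topic: str
    text: Optional[str]
    label: Optional[int]


# Assumed quality criteria: the subject matter we care about and the valid labels.
RELEVANT_TOPICS = {"billing", "support"}
VALID_LABELS = {0, 1}


def is_clean(record: Record) -> bool:
    """Keep a record only if it is on-topic and free of obvious errors."""
    on_topic = record.topic in RELEVANT_TOPICS              # related to the subject matter
    has_text = bool(record.text and record.text.strip())    # no missing or blank text
    valid_label = record.label in VALID_LABELS              # label present and in range
    return on_topic and has_text and valid_label


def clean(records: List[Record]) -> Tuple[List[Record], int]:
    """Return the records that pass the checks and how many were excluded."""
    kept = [r for r in records if is_clean(r)]
    return kept, len(records) - len(kept)


raw = [
    Record("billing", "Invoice total was wrong", 1),
    Record("sports", "Final score was 2-1", 0),     # off-topic
    Record("support", "   ", 1),                    # blank text
    Record("support", "App crashes on login", 7),   # invalid label
]
kept, dropped = clean(raw)
print(f"kept={len(kept)} dropped={dropped}")  # kept=1 dropped=3
```

Returning the number of excluded records alongside the clean data makes it easy to notice when a cleaning rule suddenly starts discarding far more data than expected.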

Another critical factor is data management. According to a 2021 ESG Data Management Survey, 88% of organizations say they face a data sprawl challenge, and 93% see it as impeding their digital transformation journey.

Unfortunately, organizations consistently underestimate the complexity of data management, which contributes significantly to the high failure rate of AI projects. Therefore, data management should be at the core of any data-centric approach.

AI is a journey, not a destination. Building an AI model is an iterative process that introduces new data to improve the model. The highest-quality data comes from interactions with the current model. Feeding that data back into the AI process creates a feedback loop that optimizes the model over time.

Traditional development methods such as waterfall don’t work well for AI: they lack flexibility and have no efficient feedback mechanism for continuous improvement. Instead, AI is about experimenting, learning from what went wrong, and adapting. As the saying goes, fail fast and fail often.

The AI world moves fast, and the ability to adapt is essential to survival in this competitive landscape.

Many organizations struggle to move from development to production. It is crucial to have an end-to-end strategy that automates this process in a reproducible way.

The ability to reproduce an existing model exactly is an essential part of the iterative process. A standardized process also helps identify the datasets used to create a model.
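One simple way to make the datasets behind a model identifiable is to fingerprint them and store the fingerprint with the training configuration. The Python sketch below assumes the dataset fits in memory as a list of dicts and uses a hypothetical manifest format (`training_manifest`); MLOps tools offer richer versions of the same idea.

```python
import hashlib
import json
from typing import Any, Dict, List


def dataset_fingerprint(rows: List[Dict[str, Any]]) -> str:
    """SHA-256 over the dataset contents, so the exact version can be identified later."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes each row's serialization independent of dict key order;
        # row order is still part of the fingerprint, since it can affect training.
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def training_manifest(rows: List[Dict[str, Any]], config: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical manifest recording what a model was trained on and how."""
    return {
        "dataset_sha256": dataset_fingerprint(rows),
        "config": config,  # e.g. hyperparameters and random seed
    }


data_v1 = [{"x": 1.0, "y": 0}, {"x": 2.5, "y": 1}]
data_v2 = data_v1 + [{"x": 3.1, "y": 1}]
config = {"learning_rate": 0.01, "epochs": 10, "seed": 42}

m1 = training_manifest(data_v1, config)
m2 = training_manifest(data_v2, config)
print(m1["dataset_sha256"] == m2["dataset_sha256"])  # False: the data changed
```

If two training runs share the same manifest, they started from identical inputs; if the fingerprints differ, the dataset changed between runs.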

Data lineage is central to common questions about a model’s ethics and bias. Lineage describes where the data comes from, where it is used, and who uses it. Without it, how can we tell whether an AI model is ethical or biased in some way?
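To make the three lineage questions concrete, the Python sketch below models a hypothetical lineage entry (`LineageRecord`) that tracks a dataset’s source, the models that use it, and the users who access it. The dataset, source, model, and user names are made up; the point is that with this information recorded, a question such as “which models were built from a source later found to be biased?” becomes a simple lookup.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class LineageRecord:
    """Hypothetical lineage entry: where a dataset comes from, where it is used, who uses it."""
    dataset: str
    source: str
    used_by_models: List[str] = field(default_factory=list)
    accessed_by: Set[str] = field(default_factory=set)

    def record_use(self, model: str, user: str) -> None:
        """Note that `user` used this dataset to build `model`."""
        if model not in self.used_by_models:
            self.used_by_models.append(model)
        self.accessed_by.add(user)


def models_affected_by(source: str, registry: List[LineageRecord]) -> List[str]:
    """Which models were built from data originating at `source`?"""
    return sorted({m for rec in registry if rec.source == source for m in rec.used_by_models})


registry = [
    LineageRecord("customers_2021", source="crm_export"),
    LineageRecord("web_clicks", source="web_logs"),
]
registry[0].record_use("churn_model", user="alice")
registry[0].record_use("upsell_model", user="bob")
registry[1].record_use("recommender", user="alice")

# If crm_export is later found to be biased, these models need review:
print(models_affected_by("crm_export", registry))  # ['churn_model', 'upsell_model']
```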

MLOps builds on the principles of DevOps and aims to support end-to-end deployment methodologies for machine learning. It is a maturing discipline that standardizes and streamlines the life cycle management of AI.

It brings data engineers, data scientists, businesspeople, and other team members together into a streamlined process. Pipelines are defined to ensure a modular design and traceability of each step of the process.
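The idea of a modular, traceable pipeline can be sketched in a few lines of Python. This is a toy runner, not the API of any MLOps framework: each hypothetical step is a named function, and the runner records how many rows went into and came out of every step, so a surprising result can be traced back to the step that caused it.

```python
from typing import Any, Callable, Dict, List, Tuple

# A step is a (name, function) pair; each function takes and returns a list of rows.
Step = Tuple[str, Callable[[List[Any]], List[Any]]]


def run_pipeline(steps: List[Step], data: List[Any]) -> Tuple[List[Any], List[Dict[str, Any]]]:
    """Run named steps in order, recording a trace entry for each one."""
    trace = []
    for name, fn in steps:
        rows_in = len(data)
        data = fn(data)
        trace.append({"step": name, "rows_in": rows_in, "rows_out": len(data)})
    return data, trace


# Hypothetical steps; each can be developed, tested, and replaced independently.
steps: List[Step] = [
    ("ingest", lambda rows: list(rows)),
    ("clean", lambda rows: [r for r in rows if r is not None]),
    ("featurize", lambda rows: [(r, r * r) for r in rows]),
]

result, trace = run_pipeline(steps, [1, None, 3])
for entry in trace:
    print(entry)
# {'step': 'ingest', 'rows_in': 3, 'rows_out': 3}
# {'step': 'clean', 'rows_in': 3, 'rows_out': 2}
# {'step': 'featurize', 'rows_in': 2, 'rows_out': 2}
```

Because each step only depends on the data passed to it, a data engineer can own `ingest` and `clean` while a data scientist iterates on `featurize`, and the trace shows exactly where rows were dropped.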

Finally, MLOps enables continuous integration (CI) and continuous delivery (CD) to provide an end-to-end reproducible experience.
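In a CI/CD setup for machine learning, one common ingredient is an automated gate that decides whether a newly trained model may replace the one in production. The Python sketch below is a hypothetical gate with an assumed metric (`accuracy`) and an assumed minimum threshold (`min_accuracy=0.80`); real pipelines pick their own metrics and thresholds.

```python
from typing import Dict, Tuple


def should_promote(
    candidate: Dict[str, float],
    production: Dict[str, float],
    min_accuracy: float = 0.80,
) -> Tuple[bool, str]:
    """Hypothetical CD gate: promote only if the candidate meets a floor and does not regress."""
    acc = candidate["accuracy"]
    if acc < min_accuracy:
        return False, f"accuracy {acc:.2f} is below the minimum {min_accuracy:.2f}"
    if acc < production["accuracy"]:
        return False, f"accuracy {acc:.2f} regresses from production {production['accuracy']:.2f}"
    return True, "promote"


ok, reason = should_promote({"accuracy": 0.86}, {"accuracy": 0.84})
print(ok, reason)  # True promote
```

In a CI job, a `False` result would typically be turned into a non-zero exit code so the pipeline stops before the candidate model is deployed.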

I am a co-host of “Utilizing AI”, a weekly podcast focusing on AI in the enterprise. In the podcast, we discuss the democratization of AI and many other topics at length.

Originally published on HPE Connect-Converge.