This article was first released as part of the April 2021 Intel announcement on GestaltT and a follow-up article on Connect-Converge.

Recently, Intel announced a new Xeon platform as the “only data-center processor with Built-in AI acceleration”. Intel positions it as enabling Artificial Intelligence (AI) everywhere, from the Edge to the Cloud. This blog looks at the announcement from an AI perspective, focusing on the new HPE servers with the 3rd Gen Intel Xeon Scalable processor.

On the same day, HPE released its upgraded portfolio of servers, which supports the latest Intel platform’s new and improved AI capabilities.

The HPE servers that are available with the new Xeon platform are:

- HPE ProLiant DL360 & DL380

- HPE Synergy 480

- HPE Apollo 2000

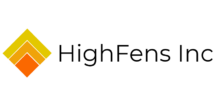

The Intel & HPE announcements go beyond the new Xeon. Other updates include system memory with more channels and higher bandwidth, increased bus throughput, and Optane persistent memory. Many of these improvements play right into the AI playbook.

Figure 1 provides an overview of the announcements with data points.

AI

An AI application’s performance is driven by how efficiently all the hardware components (processing, memory, network) work together and by how well the software takes advantage of the underlying hardware. I was pleasantly surprised to see that the announcements touched on all those facets.

The new HPE servers are better equipped than ever to handle the most challenging AI workloads. It is essential to review “why” the Xeon is the right solution for AI and “how” Intel has addressed the challenges that come with AI workloads.

Why Xeon?

Let us first look at why Intel believes Xeon is the right choice for AI. The three main reasons are:

Simplicity

People are already familiar with the Xeon architecture and the Intel ecosystem. This makes it easier to get up and running with AI and avoids the complexity of dealing with the separate architectures of accelerators such as GPUs.

Productivity

Intel has put a lot of effort and resources into expanding its software ecosystem with partners and optimizing end-to-end data science tools. The Intel ecosystem allows developers to build and deploy AI solutions faster and at a lower TCO.

Performance

Performance comes from maximizing the synergies between hardware and software. First, the processors received new AI capabilities and were additionally tuned for a wide range of workloads. Second, Intel-optimized software extensions enable applications to take full advantage of the processor’s capabilities.

Hardware AI acceleration

When Intel talks about “built-in AI acceleration”, it refers to the Intel Deep Learning Boost (aka DL Boost) technology.

Even the most straightforward Deep Learning workflow requires the execution of billions of mathematical operations. Optimizing those operations can therefore yield significant performance improvements. Some operations need only 8 bits of precision, while others need 16 or even 32 bits. The more bits involved in a multiplication, the longer it takes to execute.
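
To make the cost of wider operands concrete, here is a minimal sketch that counts how many values fit in one vector register at each width. The 512-bit register width is an assumption made for illustration (the announcement text above does not state one), and `lanes_per_register` is a hypothetical helper name.

```python
# Assumption: a 512-bit vector register. The article does not state a width;
# this value is used only to illustrate the arithmetic.
REGISTER_BITS = 512


def lanes_per_register(element_bits: int, register_bits: int = REGISTER_BITS) -> int:
    """Return how many values of the given bit width fit in one register."""
    if element_bits <= 0 or register_bits % element_bits != 0:
        raise ValueError("element width must evenly divide the register width")
    return register_bits // element_bits


# Compare the three operand widths mentioned in the text.
for bits in (32, 16, 8):
    print(f"{bits:>2}-bit values per register: {lanes_per_register(bits)}")
```

Under that assumption, halving the operand width doubles the number of values a single instruction can process, which is where the appeal of lower precision comes from.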

DL Boost can perform multiplications at a lower precision while still returning results at a higher precision. As a result, more multiplications can occur in the same amount of time. Because this happens billions of times, the acceleration gains quickly add up.
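
The idea of multiplying at low precision while keeping the result at higher precision can be sketched in plain Python. This is an illustration of the general technique (symmetric 8-bit quantization with a wide accumulator), not Intel's implementation; the function names, the scaling scheme, and the sample values are all assumptions chosen for the example.

```python
def scale_for(values):
    """Pick a symmetric scale so the largest magnitude maps to 127."""
    return max(abs(v) for v in values) / 127 or 1.0


def quantize(values, scale):
    """Map floats to signed 8-bit integers, clamped to [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(a_q, b_q):
    """Multiply 8-bit integers and sum the products in a wider accumulator.

    Python integers never overflow; hardware would use a wider integer
    accumulator (for example 32 bits) for the same purpose.
    """
    acc = 0
    for x, y in zip(a_q, b_q):
        acc += x * y
    return acc


# Hypothetical sample data, not taken from the article.
weights = [0.5, -1.0, 0.25, 2.0]
activations = [1.0, 0.5, 2.0, 1.5]

w_scale = scale_for(weights)
a_scale = scale_for(activations)

# Low-precision multiply, wide accumulate, then rescale back to a float.
acc = int8_dot(quantize(weights, w_scale), quantize(activations, a_scale))
approx = acc * w_scale * a_scale

# Full-precision reference for comparison.
exact = sum(w * a for w, a in zip(weights, activations))

print(f"exact={exact:.4f} approx={approx:.4f}")
```

The quantized result stays close to the full-precision one because the products are summed in a wider type before being rescaled; only the individual operands lose precision.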

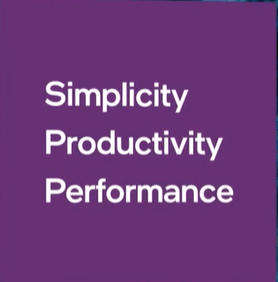

The latest iteration of DL Boost shows up to a 1.74x performance improvement over the previous generation. Figure 2 depicts the support for DL Boost across generations, with further improvements expected in the future.

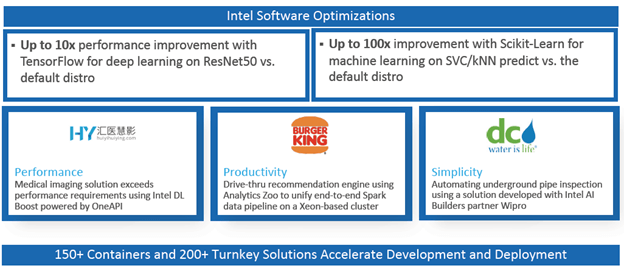

Software Optimization

To get the most out of hardware innovation, you need software integration. It is a real challenge to develop efficient pipelines that take you from data processing to model training and finally to model deployment. The ability to consolidate these stages into a single platform improves both productivity and scalability.

The frequent innovations in AI make it difficult for developers to keep up with all the changes. A supported software ecosystem provides an abstraction layer allowing developers to stay focused on solving their problems.

Intel understands the need for software optimizations and has a rich ecosystem of AI tools available to its customers. There are too many tools to list them all (figure 3), but the following tools are worth a closer look.

- Analytics Zoo delivers a unified analytics and AI platform for distributed TensorFlow, PyTorch, Keras, and Apache Spark.

- For customers who want a Python-based toolchain, Intel has the oneAPI AI Analytics Toolkit.

- Developers looking to deploy pre-trained models from Edge to Cloud should look at the OpenVINO toolkit.

Summary

The latest Intel & HPE announcements make the case that AI applications, from Edge to Cloud, are the future. Intel is pursuing two parallel tracks to increase its market share. The first track is to consistently deliver innovative hardware that addresses the many AI challenges with built-in acceleration. The second track is to keep investing time and resources in building ecosystems that provide software optimization. This combination makes the Xeon a true AI workhorse and an AI enabler for customers. It is also a solid strategy to build on for the future.

Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries.

HPE, the HPE logo, and other HPE marks are trademarks of Hewlett Packard Enterprise Development LP or its subsidiaries.