Data at large

Every day, new users join a growing group of consumers of Artificial Intelligence (AI) applications. Innovative applications such as voice assistants, self-driving cars, and smart cities all contribute to the democratization of AI. The amount of data generated by those applications, as well as by wireless devices such as phones and tablets, is staggering.

Where does all that data go?

Usually, data is moved to a “centralized” datacenter, such as a public cloud, to be analyzed. However, this approach is not always efficient, and it can take a long time to return a response to the consumer. It is also neither scalable nor cost-effective, because the network infrastructure must scale up with the amount of data to be analyzed.

A more efficient and scalable solution is to use a “distributed” model that analyzes the data close to where it is collected. In other words, the “distributed” model processes the data at the edge, an approach known as Edge Computing.

In this blog, I will discuss how Edge Computing enables us to keep up with the growing demand for AI.

The Edge

The term “edge” originally comes from the network world and identifies the point where traffic comes in and out of a system.

There is a variety of definitions available depending on whom you ask. Let’s have a look at the description of the edge as defined in the “State of the Edge 2020 – A Market and Ecosystem” report.

The report makes the following key statements:

- The edge is a location

- The edge we care about is the last-mile network

- There are two sides to the edge:

  - Infrastructure edge: devices and servers on the network operator side of the last mile

  - Device edge: equipment on the device (user) side of the last mile

Edge Computing

Simply put, Edge Computing is an emerging model based on a distributed or decentralized architecture in which data collection and processing happen at the “edge”.

Edge Computing enables efficient processing of large amounts of data as close as possible to the data source(s). It allows smart applications and devices to react in near real time, with very low latency. This is critical for AI technologies such as self-driving cars.
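To put rough numbers on why distance matters, here is a back-of-the-envelope sketch in Python. It assumes signals in optical fiber travel at about 2 × 10^8 m/s and uses hypothetical distances (1,500 km to a cloud region, 10 km to an edge site) and hypothetical response times for a car at 100 km/h. It counts propagation delay only, so it gives a lower bound rather than a measured latency.

```python
# Back-of-the-envelope latency arithmetic (illustrative assumptions only).
SPEED_IN_FIBER_M_PER_S = 2.0e8  # assumption: roughly 2/3 of the speed of light


def propagation_rtt_ms(distance_km: float) -> float:
    """Minimum round-trip time over `distance_km` of fiber, in milliseconds.

    Counts propagation delay only; queuing, routing, and processing add more.
    """
    one_way_s = distance_km * 1000 / SPEED_IN_FIBER_M_PER_S
    return 2 * one_way_s * 1000


def distance_travelled_m(speed_kmh: float, delay_ms: float) -> float:
    """Distance in meters a vehicle covers at `speed_kmh` during `delay_ms`."""
    return speed_kmh * 1000 / 3600 * delay_ms / 1000


# Hypothetical distances: a remote cloud region vs. a nearby edge site.
for label, km in [("cloud region, 1500 km", 1500), ("edge site, 10 km", 10)]:
    print(f"{label}: at least {propagation_rtt_ms(km):.2f} ms round trip")

# Hypothetical end-to-end response times for a car driving at 100 km/h.
for delay_ms in (10, 100, 250):
    print(f"{delay_ms} ms delay at 100 km/h: {distance_travelled_m(100, delay_ms):.2f} m")
```

At 100 km/h a car covers close to 3 m in 100 ms, which is why shaving off network round trips matters for such use cases.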

IoT

The Internet of Things (IoT) usually refers to computing devices connected over a network. The devices share sensor data and have limited local computing capabilities. The definition of IoT keeps expanding as more devices become available to the consumer market (thermostats, home security, phones), the enterprise (smart cities, transportation, agriculture), and the military (drones, surveillance).

5G

New commercial networks such as 5G are essential to the success of Edge Computing and IoT. 5G networks increase the demand for edge solutions by enabling flexible network architectures that support very low-latency, high-bandwidth, and reliable services.

The Edge in numbers

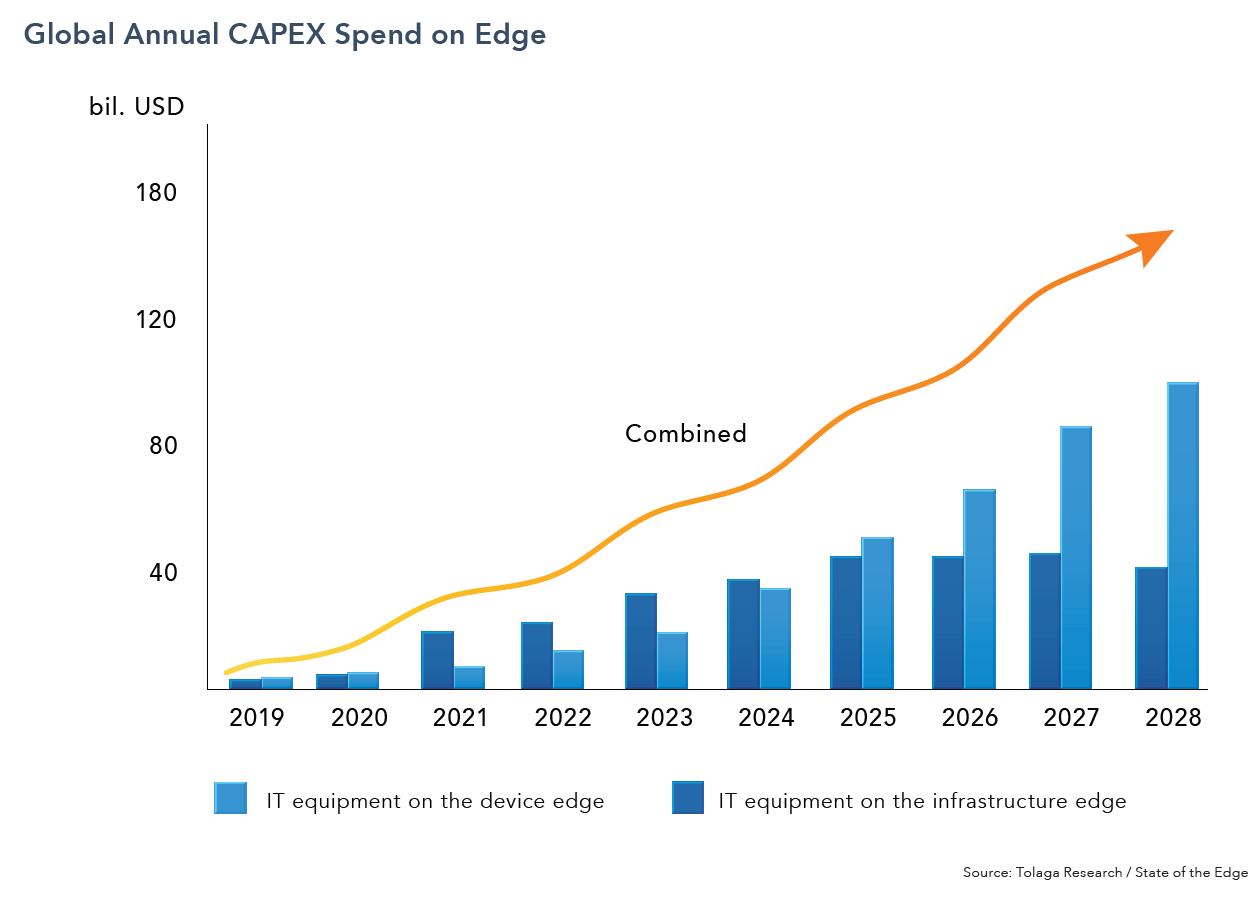

The “State of the Edge” report projects that over $700 billion in cumulative CAPEX will be spent on edge infrastructure between now and 2028 (Figure 1). The projection is based on a narrow model that focuses only on the IT infrastructure and data center facilities required to support edge use cases.

Additionally, Gartner states that in 2018 only 10% of enterprise-generated data was created and processed outside a centralized data center or cloud. Gartner predicts that by 2025 this figure will reach 75%, driven by decentralized architectures such as Edge Computing.

AI at the Edge

AI workloads are characterized by low-latency and high resource requirements. Processing the data as close as possible to its source is needed to satisfy those requirements.

Edge Computing and AI are playing an essential role in today’s digital transformation of the consumer and enterprise markets. With an increasing number of AI services in the residential, healthcare, automotive, manufacturing, and retail markets, as well as in smart cities, we need to get the most out of AI to achieve its full potential.

The powerful combination of Edge Computing and AI is referred to as Edge AI (EA) or Edge Intelligence (EI).

Innovative solutions around edge network automation are needed to jumpstart this effort. New edge solutions will have a smaller footprint and standalone hardware and software, resulting in sustainable, cost-efficient solutions optimized for space and low power consumption.

Centralized vs. Distributed

One way to look at the Edge Computing model is as an extension of the cloud computing model. The main difference is that the cloud is a centralized model: all the data, compute, and network infrastructure is in a central location.

The Edge Computing model pushes computing tasks and services from the network core to the network edge. Edge Computing infrastructure acts as a network of small local data centers for storage and processing.
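One way to picture a network of small local data centers is request placement: send work to the closest available edge site and fall back to the central cloud otherwise. The Python sketch below is a hypothetical illustration; the `Site` class, the site names, the round-trip times, and the latency budget are all assumptions, not part of any specific edge platform.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    rtt_ms: float           # hypothetical measured round-trip time to the client
    available: bool = True  # whether the site is currently healthy


def pick_site(edge_sites: list[Site], cloud: Site, max_rtt_ms: float) -> Site:
    """Return the lowest-latency available edge site within the latency budget,
    falling back to the central cloud when no edge site qualifies."""
    candidates = [s for s in edge_sites if s.available and s.rtt_ms <= max_rtt_ms]
    return min(candidates, key=lambda s: s.rtt_ms, default=cloud)


edges = [Site("edge-a", 4.0), Site("edge-b", 2.5, available=False), Site("edge-c", 6.0)]
cloud = Site("central-cloud", 40.0)

print(pick_site(edges, cloud, max_rtt_ms=10).name)  # edge-a (edge-b is unavailable)
print(pick_site(edges, cloud, max_rtt_ms=3).name)   # central-cloud (no edge site fits)
```

The cloud fallback reflects the view of edge as an extension of the cloud rather than a replacement for it.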

Moving computing tasks to the edge is only part of the solution. The edge model also demands a new architectural approach:

- Infrastructure:

  - At the edge, devices vary in performance, energy constraints, and form factor.

  - Networks and devices/sensors need a high level of reliability.

  - Network function virtualization (NFV) and software-defined networking (SDN) solutions are needed to support technologies such as 5G.

- Software:

  - An agile software methodology provides the flexibility and efficiency needed for a distributed model.

  - Deploying a microservices architecture based on containers, along with an orchestrator such as Kubernetes, makes the development cycle more predictable, resilient, and reliable.

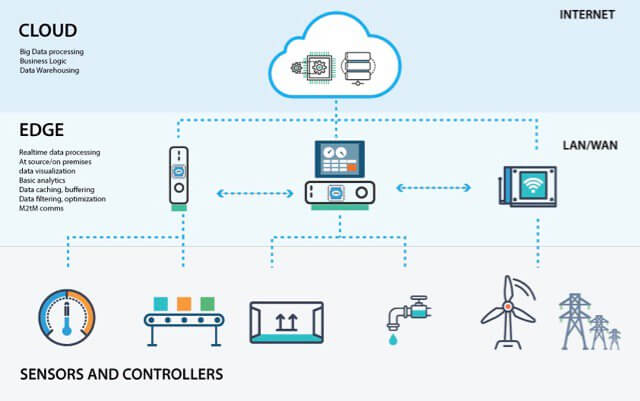

Figure 2 shows an overall architecture in which various devices and sensors deliver data to the edge, close to the source, for real-time processing. Because processing is decentralized, network traffic is reduced significantly. The cloud can then store edge data for longer periods or for archival, and any additional processing or further analysis can be done in a private or public cloud as needed.
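The traffic reduction in Figure 2 can be sketched as edge-side aggregation: instead of forwarding every raw sensor reading, the edge node sends a compact summary upstream. This is a minimal Python illustration; the sensor ID, the one-reading-per-second rate, and the summary fields are hypothetical, and a real deployment would pick its own aggregation and wire format.

```python
import json
import statistics


def summarize_window(sensor_id: str, readings: list[float]) -> dict:
    """Reduce a window of raw readings to a compact summary at the edge."""
    return {
        "sensor": sensor_id,
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 3),
    }


# Hypothetical window: one temperature reading per second for ten minutes.
raw = [round(20.0 + (i % 10) * 0.1, 1) for i in range(600)]

# What would be sent upstream without and with edge-side aggregation.
raw_payload = json.dumps({"sensor": "temp-01", "readings": raw}).encode()
summary_payload = json.dumps(summarize_window("temp-01", raw)).encode()

print(f"raw: {len(raw_payload)} bytes, summary: {len(summary_payload)} bytes")
```

The raw window can still be kept locally for a while, or shipped to the cloud later in bulk for archival.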

Benefits

Some of the benefits of Edge Computing for demanding AI applications are:

- Faster response time:

  - The local distribution of computing power and data storage results in lower latency and quicker turnaround.

- Security & Compliance:

  - Keeping data close to its source limits data exposure and reduces the risk of data leakage.

  - Additionally, it is easier to implement a security framework that satisfies audit and compliance requests.

- Reliability:

  - Operations run locally, which makes the system less vulnerable to wider network interruptions or failures.

- Cost-effective:

  - Processing the data locally instead of in the cloud is more cost-effective.

  - Less data needs to be transferred to the cloud, which lowers transfer costs and requires fewer cloud resources.

- Interoperability:

  - Devices at the edge can communicate and interact with both legacy and newer devices.

- Scalability:

  - Adding micro-datacenters can create mini clusters at the edge that deliver increased performance and capacity.
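The reliability benefit largely comes from the edge continuing to work while the uplink is down. A common pattern for this is store-and-forward buffering. The Python sketch below is a hypothetical illustration: the `StoreAndForward` class and the `send` callback are assumptions, not a specific product's API. The buffer is bounded so memory use stays fixed during a long outage.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer messages locally and forward them when the uplink is available.

    The buffer is bounded: when it is full, appending a new message silently
    drops the oldest one, so memory use never grows during a long outage.
    """

    def __init__(self, send: Callable[[str], bool], capacity: int = 1000):
        self._send = send  # hypothetical callback: returns True on successful delivery
        self._buffer = deque(maxlen=capacity)

    def publish(self, message: str) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Deliver buffered messages in order; return how many were sent."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # uplink down: keep the remaining messages for later
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)


# Usage: simulate an outage followed by recovery.
link_up = False
delivered = []


def fake_send(msg: str) -> bool:
    if link_up:
        delivered.append(msg)
    return link_up


q = StoreAndForward(fake_send, capacity=3)
for i in range(5):
    q.publish(f"reading-{i}")   # uplink is down, so messages are buffered
print(q.pending())              # 3 (the two oldest readings were dropped)
link_up = True
print(q.flush(), delivered)     # 3 ['reading-2', 'reading-3', 'reading-4']
```

Dropping the oldest data is only one policy; some applications would rather keep the oldest readings or spill to local storage instead.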

Challenges

Edge Computing is still maturing, with many use cases depending on proprietary hardware and software platforms.

There are areas where Edge Computing makes a lot of sense; however, it is essential to understand its possible challenges:

- Network:

  - The network requirements for the edge differ from those of a centralized solution.

  - Modern network infrastructure and architecture are required to keep latency low while providing high bandwidth.

  - Redundancy and business continuity are more challenging to maintain at the edge.

- Security:

  - Although Edge Computing limits data leakage, it is more difficult to maintain compliance and security at the edge.

- Geographical:

  - The workforce is also distributed, and keeping it in sync can be a challenge without proper maintenance instructions and training.

- Limited resources:

  - Resources at the edge are limited, which calls for a more efficient software stack that does not waste them.
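As one illustration of a resource-conscious software stack, streaming algorithms can compute statistics without keeping raw samples in memory. The Python sketch below uses Welford's online algorithm for mean and variance; it is one example technique, not something prescribed by the challenges above, and the sample readings are made up.

```python
import math


class RunningStats:
    """Welford's online algorithm: running mean and variance in O(1) memory.

    No raw samples are stored, which suits memory-constrained edge devices.
    """

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)  # uses the updated mean

    @property
    def variance(self) -> float:
        """Sample variance (n - 1 denominator); 0.0 for fewer than two samples."""
        return self._m2 / (self.n - 1) if self.n > 1 else 0.0

    @property
    def stdev(self) -> float:
        return math.sqrt(self.variance)


stats = RunningStats()
for reading in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:  # hypothetical readings
    stats.update(reading)
print(stats.n, round(stats.mean, 4), round(stats.variance, 4))
```

Memory use stays the same whether the device has seen eight readings or eight million.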

Summary

Edge Computing is a distributed architecture that delivers compute and data storage closer to where the data is collected, enabling near real-time response times and lower bandwidth requirements.

AI and Edge Computing are playing an essential role in today’s digital transformation of the consumer and enterprise markets. Edge AI (EA), or Edge Intelligence (EI), is key to the continued growth of AI applications that demand low latency and real-time processing on edge devices.